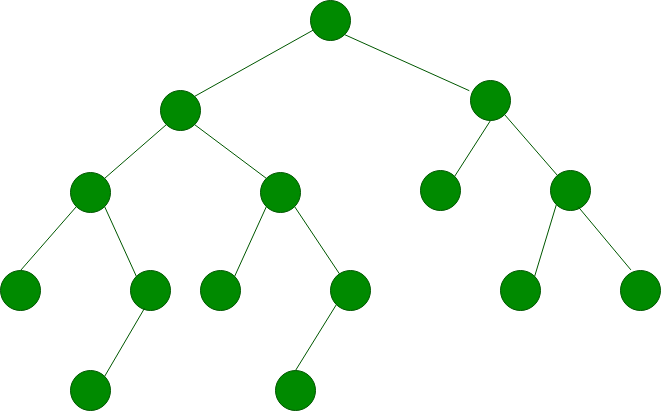

AVL Tree Data Structure is like a smart version of a normal binary search tree that knows how to stay balanced on its own. If you have ever worked with trees in coding, you know they can get messy if too many nodes pile up on one side. This makes searching, inserting, or deleting stuff slow and annoying. AVL Tree fixes that problem by automatically keeping itself balanced after every change.

You can think of it like a well managed bookshelf that shifts things around so everything stays in order. In this guide, we will explain about the AVL Tree in the simplest way possible with clear examples, diagrams, and even some Python code implementation. So whether you are a beginner or just brushing up for interviews, you are in the right place.

What is AVL Tree Data Structure?

An AVL tree data structure is a type of binary search tree that balances itself automatically after every insertion or deletion. It checks the height of its left and right sides, and if the difference is more than one, it rotates parts of the tree to make it even again. This way, it stays fast and efficient no matter how many nodes you add.

AVL stands for Adelson-Velsky and Landis, the two people who came up with this smart tree idea in 1962. It was the first self-balancing binary search tree and is still widely used in places where quick lookups and updates are needed.

What is Balanced Binary Search Tree (BST)?

A balanced binary search tree is a tree where the left and right sides of every node are almost equal in height. This means the tree does not lean too much to one side. When a tree is balanced, it helps in keeping all the operations like search, insert, and delete fast.

In a normal binary search tree, if you keep adding sorted values, the tree becomes like a linked list leaning to one side. That makes it very slow to work with. Searching something that could take forever. A balanced tree avoids this problem. It makes sure that the height of the tree stays small even if you add or remove many nodes. That’s why balanced trees are super important in coding, especially when you need things to work fast and smoothly. AVL Tree is one of the best examples of a balanced binary search tree because it fixes itself whenever it gets unbalanced.

What Makes An AVL Tree Data Structure Special?

The AVL Tree Data Structure is special because it always tries to stay balanced. Every time you insert or delete a node, the tree checks if it is still balanced. It does this by using something called a balance factor. The balance factor is the difference in height between the left and right subtrees of a node. If this difference is more than 1 or less than -1, the tree says hey I’m getting lopsided and then it fixes itself by doing a small rotation.

This self-balancing feature makes the AVL Tree fast. No matter how many nodes you add, the time to search, insert, or delete stays around O(log n) which is really good.

Unlike normal binary search trees, which can become slow if they get too tall or uneven, AVL Trees always keep themselves neat and tidy. That’s what makes them so powerful and reliable in real-world applications.

Basic Terminologies in AVL Tree Data Structure

Before we go deeper into how AVL Trees work, let us understand some basic terms. These are simple words you will see again and again when working with any tree data structure.

- Node: A node is just a box that holds a value. Each node can have a left child, a right child, or both.

- Root: The topmost node in the tree. It is where everything starts.

- Height: The height of a node is the number of edges from that node to the deepest leaf below it. A leaf node has height 0.

- Balance Factor: This is the difference between the height of the left subtree and the height of the right subtree of a node. Balance Factor = height(left) – height(right).

- Rotation: A small twist or turn in the tree to fix imbalance. It helps the tree stay balanced and fast. We will learn about types of rotations in the next section.

Rotations in AVL Tree Data Structure

| Rotation Type | When It Happens | Also Called | Fix |

| Right Rotation | Left subtree is too tall | Left Left (LL) Case | Rotate right around the unbalanced node |

| Left Rotation | Right subtree is too tall | Right Right (RR) Case | Rotate left around the unbalanced node |

| Left Right Rotation | Left child is right-heavy | Left Right (LR) Case | First left rotation on left child, then right rotation on node |

| Right Left Rotation | Right child is left-heavy | Right Left (RL) Case | First right rotation on right child, then left rotation on node |

AVL Tree Data Structure vs Binary Search Tree (BST)

| Feature | AVL Tree | Binary Search Tree (BST) |

| Balance | Always balanced using rotations | Not guaranteed to be balanced |

| Performance | O(log n) for search, insert, delete | Worst-case O(n) if unbalanced |

| Rotations | Performs rotations to maintain balance | No self-balancing, so no rotations |

| Structure | Height is strictly controlled | Can become skewed like a linked list |

| Memory Usage | Slightly more (stores balance factor) | Less, no extra data for balancing |

| Use Case | Good for frequent insert/delete with fast lookup | Simple cases where data comes sorted or nearly so |

| Complexity | More complex to implement due to balancing logic | Easier to implement |

Applications of AVL Tree Data Structure

- Used in databases to maintain sorted records with fast access.

- Helps in memory management in operating systems.

- Used in search engines for indexing and ranking.

- Useful in network routing algorithms.

- Applied in file systems for managing large directories efficiently.

Python code Implementation of AVL Tree Data Structure

In this section we will be talking how we will able to implement AVL tree data structure in python.

class Node:

def __init__(self, key):

self.key = key

self.left = None

self.right = None

self.height = 1

class AVLTree:

def get_height(self, node):

if not node:

return 0

return node.height

def get_balance(self, node):

if not node:

return 0

return self.get_height(node.left) - self.get_height(node.right)

def right_rotate(self, z):

y = z.left

T3 = y.right

y.right = z

z.left = T3

z.height = 1 + max(self.get_height(z.left), self.get_height(z.right))

y.height = 1 + max(self.get_height(y.left), self.get_height(y.right))

return y

def left_rotate(self, z):

y = z.right

T2 = y.left

y.left = z

z.right = T2

z.height = 1 + max(self.get_height(z.left), self.get_height(z.right))

y.height = 1 + max(self.get_height(y.left), self.get_height(y.right))

return y

def insert(self, root, key):

if not root:

return Node(key)

elif key < root.key:

root.left = self.insert(root.left, key)

else:

root.right = self.insert(root.right, key)

root.height = 1 + max(self.get_height(root.left), self.get_height(root.right))

balance = self.get_balance(root)

# Left Left

if balance > 1 and key < root.left.key:

return self.right_rotate(root)

# Right Right

if balance < -1 and key > root.right.key:

return self.left_rotate(root)

# Left Right

if balance > 1 and key > root.left.key:

root.left = self.left_rotate(root.left)

return self.right_rotate(root)

# Right Left

if balance < -1 and key < root.right.key:

root.right = self.right_rotate(root.right)

return self.left_rotate(root)

return root

def pre_order(self, root):

if not root:

return

print(root.key, end=" ")

self.pre_order(root.left)

self.pre_order(root.right)

# Example usage

tree = AVLTree()

root = None

for key in [10, 20, 30, 40, 50, 25]:

root = tree.insert(root, key)

print("Preorder traversal of the constructed AVL tree:")

tree.pre_order(root)

Advantages of AVL Tree Data Structure

- Maintains strict balance for guaranteed O(log n) time complexity.

- Faster lookups, insertions, and deletions compared to unbalanced trees.

- Automatically balances itself after operations using rotations.

- Efficient for real-time applications where data changes frequently.

- Prevents skewed tree structures that degrade performance.

FAQs

What is an AVL Tree?

An AVL Tree is a self-balancing binary search tree where the difference in heights between left and right subtrees (called the balance factor) is at most one for every node. It ensures efficient search, insert, and delete operations.

Why is it called AVL Tree?

It is named after its inventors, Adelson-Velsky and Landis, who introduced it in 1962. They developed the AVL Tree to maintain balance in binary search trees automatically.

How does an AVL Tree maintain balance?

An AVL Tree maintains balance by performing rotations (left, right, left-right, and right-left) whenever a node becomes unbalanced after insertion or deletion.

What is the difference between AVL Tree and BST?

BST are not sure every time that they should get balanced and also having the time complexity of O(n) worst case complexity for the operations like insertion or deletion. Whereas, AVL tree data structure is a self balancing binary search tree and comparing worst complexity comes to O(log n) worst case complexity structure.

What is the time complexity of AVL Tree operations?

All main operations such as search, insertion, and deletion in an AVL Tree have O(log n) time complexity because the tree remains balanced.

When should I use an AVL Tree?

AVL Trees are best used when you need frequent insertion and deletion with consistently fast lookup times, especially in applications like databases, memory management, and indexing. AVL Trees are based on when we need to achieve something better complexity within data structure.

Conclusion

AVL Tree is a powerful self-balancing binary search tree (BST) that ensures good performance by keeping the tree height minimal. It’s ability is to maintain balance through rotations makes it ideal for applications that require fast and quick insertions, deletions, and lookups. Understanding how AVL Trees work not only improves your grasp of data structures but also prepares you for building more efficient and scalable systems. Whether you are a student, developer, or enthusiast, mastering AVL Trees is a valuable step in your programming journey.

![Top 10 Applications of Linked List Data Structure [2025]](https://www.iquanta.in/blog/wp-content/uploads/2025/02/WhatsApp-Image-2025-02-01-at-2.48.42-PM-218x150.jpeg)