In the world of computer science, sorting algorithms are essential for managing data arrangement in a series. Whether it is about arranging names in an alphabetical order or improving search results then quicksort algorithm itself plays an important role.

We have already covered multiple algorithms like Bubble Sort, Merge Sort, Insertion Sort and many more. In this blog, we will be covering the QuickSort Algorithm, its working along with time and space complexity. Let us deep dive into comprehensive guide of understanding the Quicksort Algorithm.

What is a Quicksort Algorithm?

The Quicksort algorithm is a highly used sorting algorithm that follows the divide and conquer rule. This algorithm was developed by British computer scientist Tony Hoare in 1959. This Algorithm is famous for its fast performance, efficiency in working with large datasets. It works by selecting a pivot element from the array data structure and then dividing the other elements into two sub arrays: First one with elements less than or equal to the pivot and another with elements greater than the pivot.

These sub-arrays are then recursively sorted using the same process. The result is a sorted array and it build’s by combining the sorted sub-arrays with the pivot element which we are using in QuickSort algorithm. Unlike other sorting algorithms, QuickSort algorithm performs sorting in place and it requires minimal extra memory. This makes it particularly useful when we talk about memory focused approach.

How QuickSort Algorithm Works?

The QuickSort algorithm sorts an array by breaking it down into smaller parts and sorting those parts one by one as it is a classic example of the divide and conquer process. Here is a detailed step by step explanation of how quicksort algorithm works.

Choosing the Pivot Element

First step is to choose the pivot element and it is the key element that helps us to divide the array into two parts. There are multiple strategies to choose the pivot element:

- First element: Easy but sometimes leads to poor performance on sorted or nearly sorted arrays.

- Last element: Commonly used because it simplifies implementation part in quicksort algorithm.

- Random element: Help in avoiding worst case performance by making pivot selection unsure.

- Median element: Choosing the median as pivot often yields balanced partitions but requires extra effort.

Partitioning the Array Around the Pivot

Once the pivot element is selected, the array is reorganized (partitioned) so that all elements less than or equal to the pivot are placed before it. Post all elements greater than the pivot are placed after it. This step ensures that the pivot is in it’s correct position in the sorted array. Partitioning is done in-place which means all elements are swapped within the original array to avoid extra memory usage.

Recursively Sorting the Sub Arrays

After partitioning step has been done in quicksort algorithm, the array is divided into two sub arrays. The left sub array contains elements less than or equal to the pivot and the right sub array contains elements greater than the pivot element in quicksort algorithm. The QuickSort algorithm is then recursively applied to these sub arrays. Each recursive call repeats the process: selecting a pivot, partitioned, and then further dividing until the sub arrays are of size 1 or 0, which needs to be sorted.

Implementation of QuickSort Algorithm

def quick_sort(arr):

# Base case: if the list has 0 or 1 elements, it's already sorted

if len(arr) <= 1:

return arr

# Choosing the pivot as the middle element (similar to diagram: 45, then 25, 12, etc.)

pivot = arr[0]

# Partitioning the array into three parts

left = [x for x in arr[1:] if x < pivot] # Elements less than pivot

right = [x for x in arr[1:] if x > pivot] # Elements greater than pivot

# Recursively applying quick sort on the left and right parts

return quick_sort(left) + [pivot] + quick_sort(right)

# Test the function with the array from the diagram

arr = [45, 67, 12, 8, 89, 25]

sorted_arr = quick_sort(arr)

print("Sorted Array:", sorted_arr)

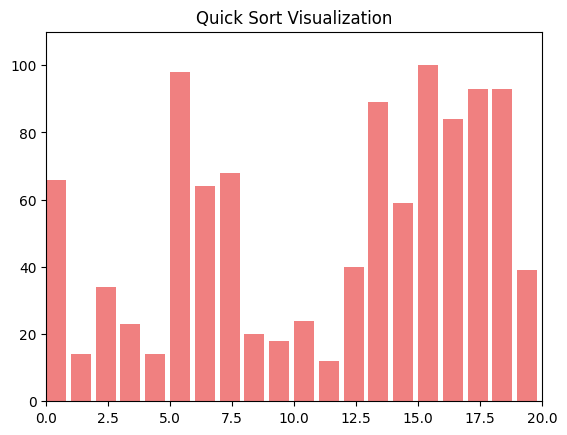

Start with pivot 45

Split into: <45: [25, 12, 8], >45: [89, 67]

Recursion on [25, 12, 8] with pivot 25, and [89, 67] with pivot 89

Continue until individual elements are sorted

Merge back the sorted parts

Complexity Analysis of QuickSort Algorithm

Best Case: O(n log n)

- Happens when the pivot divides the array into two equal parts.

- Each level of recursion works on half the array, and there are log n levels.

- Total work = n (per level) × log n (levels) = O(n log n)

Average Case: O(n log n)

- This is the most common case when elements are randomly distributed.

- The pivot usually divides the array fairly evenly.

- Performance remains efficient and close to the best case.

Worst Case: O(n²)

- Happens when the pivot is always the smallest or largest element (like in already sorted arrays).

- The array is divided into one big and one empty part at each step.

- It requires n levels, each doing n work O(n × n) = O(n²)

| Algorithm | Best Case Time | Average Case Time | Worst Case Time | Space Complexity | Stability |

| QuickSort | O(n log n) | O(n log n) | O(n²) | O(log n) | No |

| Merge Sort | O(n log n) | O(n log n) | O(n log n) | O(n) | Yes |

| Heap Sort | O(n log n) | O(n log n) | O(n log n) | O(1) | No |

| Bubble Sort | O(n) | O(n²) | O(n²) | O(1) | Yes |

| Insertion Sort | O(n) | O(n²) | O(n²) | O(1) | Yes |

| Selection Sort | O(n²) | O(n²) | O(n²) | O(1) | No |

| Timsort (Python’s sort) | O(n) | O(n log n) | O(n log n) | O(n) | Yes |

Advantages of QuickSort Algorithm

- QuickSort is very fast on average and works efficiently even for large lists of data.

- It uses very little extra memory because it sorts the list in the same place without needing another array.

- It handles big data well and usually performs better than other sorting methods like Bubble Sort or Insertion Sort.

- It works faster on modern computers because it makes good use of the system’s cache memory.

- It follows a divide-and-conquer method that is making it easy to solve the problem step by step and even run parts in parallel.

Disadvantages of Quicksort Algorithm

- QuickSort can be slow in the worst case especially if the pivot is always the smallest or largest element.

- It may not be stable, which means the original order of equal elements might not be preserved.

- It uses recursion, so if the list is too large then it can cause a stack overflow error.

- Choosing a bad pivot can lead to poor performance and unbalanced partitions.

- It is harder to implement correctly compared to simpler sorting algorithms like Bubble Sort or Insertion Sort.

Applications of QuickSort Algorithm

Here are some common and practical applications of QuickSort Algorithm:

- Sorting large datasets in computer programs, because QuickSort is fast and memory-efficient.

- Used in programming libraries like Python’s sort() (internally uses Timsort, but based on QuickSort ideas) and C/C++ standard libraries.

- Database systems use QuickSort to quickly sort query results before displaying them.

- In file systems, for sorting names or files alphabetically or by size.

- Used in competitive programming because of its high speed and efficiency on average.

FAQs on QuickSort Algorithm

What is QuickSort Algorithm?

QuickSort is a divide-and-conquer sorting algorithm that selects a pivot element and partitions the array around the pivot, placing smaller elements before it and larger elements after it. The process is recursively repeated for sub-arrays.

Why is it called “Quick” sort?

It is called “Quick” because it performs well on average and typically sorts large datasets faster than other algorithms like Bubble Sort or Insertion Sort.

How does QuickSort Algorithm works?

QuickSort involves choosing a pivot, partitioning the array such that elements less than the pivot come before it and greater after and then recursively applying the same logic to sub arrays.

What is pivot element in QuickSort Algorithm?

A pivot is an element chosen from the array to divide it into two partitions. There are some common strategies that we are applying to the quicksort algorithm which involves first element, last element, random element and median of three.

What is the time complexity of QuickSort Algorithm?

- Best Case: O(n log n)

- Average Case: O(n log n)

- Worst Case: O(n²), usually when the smallest or largest element is always picked as the pivot.

What is the space complexity of QuickSort Algorithm?

QuickSort is an in-place sorting algorithm, meaning it doesn’t require extra memory, so its space complexity is O(log n) due to recursive stack calls.

Is QuickSort Algorithm stable?

No, QuickSort Algorithm is not a stable sort. Equal elements may not get their original order unless a stable part is implemented.

![Top 10 Applications of Linked List Data Structure [2025]](https://www.iquanta.in/blog/wp-content/uploads/2025/02/WhatsApp-Image-2025-02-01-at-2.48.42-PM-218x150.jpeg)